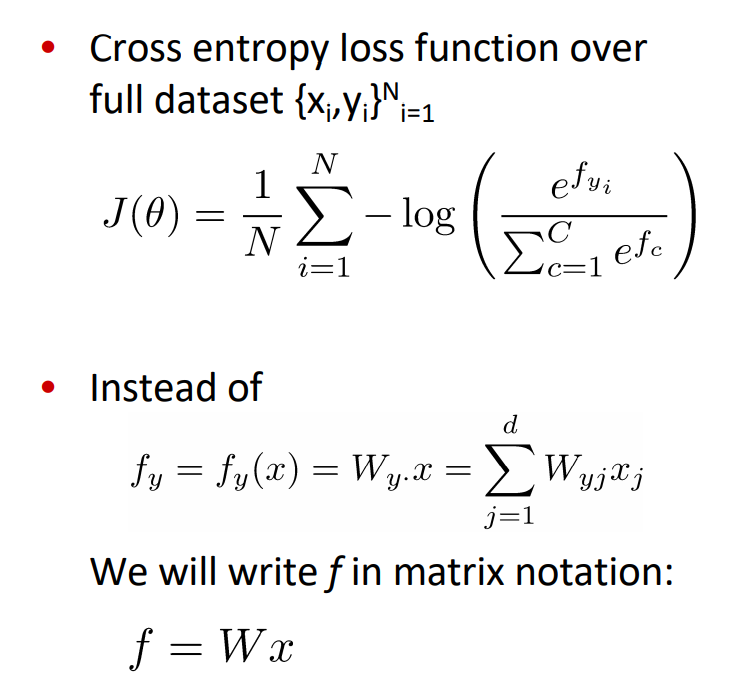

W are the weights (the parameters that need to be adjusted).
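Below is a minimal NumPy sketch of the softmax classifier. It assumes a toy problem with 3 classes and 2-dimensional inputs, and the weights are made up rather than taken from the lecture. Each row of W scores one class, and softmax turns those scores into probabilities:

```python
import numpy as np

def softmax(scores):
    # Subtract the max for numerical stability; this does not change the result.
    shifted = scores - np.max(scores)
    exps = np.exp(shifted)
    return exps / exps.sum()

def predict_proba(W, x):
    # W has one row of weights per class, so W @ x gives one score per class.
    return softmax(W @ x)

# Hypothetical weights: 3 classes, 2 input features.
W = np.array([[1.0, 0.5],
              [-0.5, 1.0],
              [0.0, -1.0]])
x = np.array([2.0, 1.0])
probs = predict_proba(W, x)   # class 0 gets the largest score (2.5)
```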

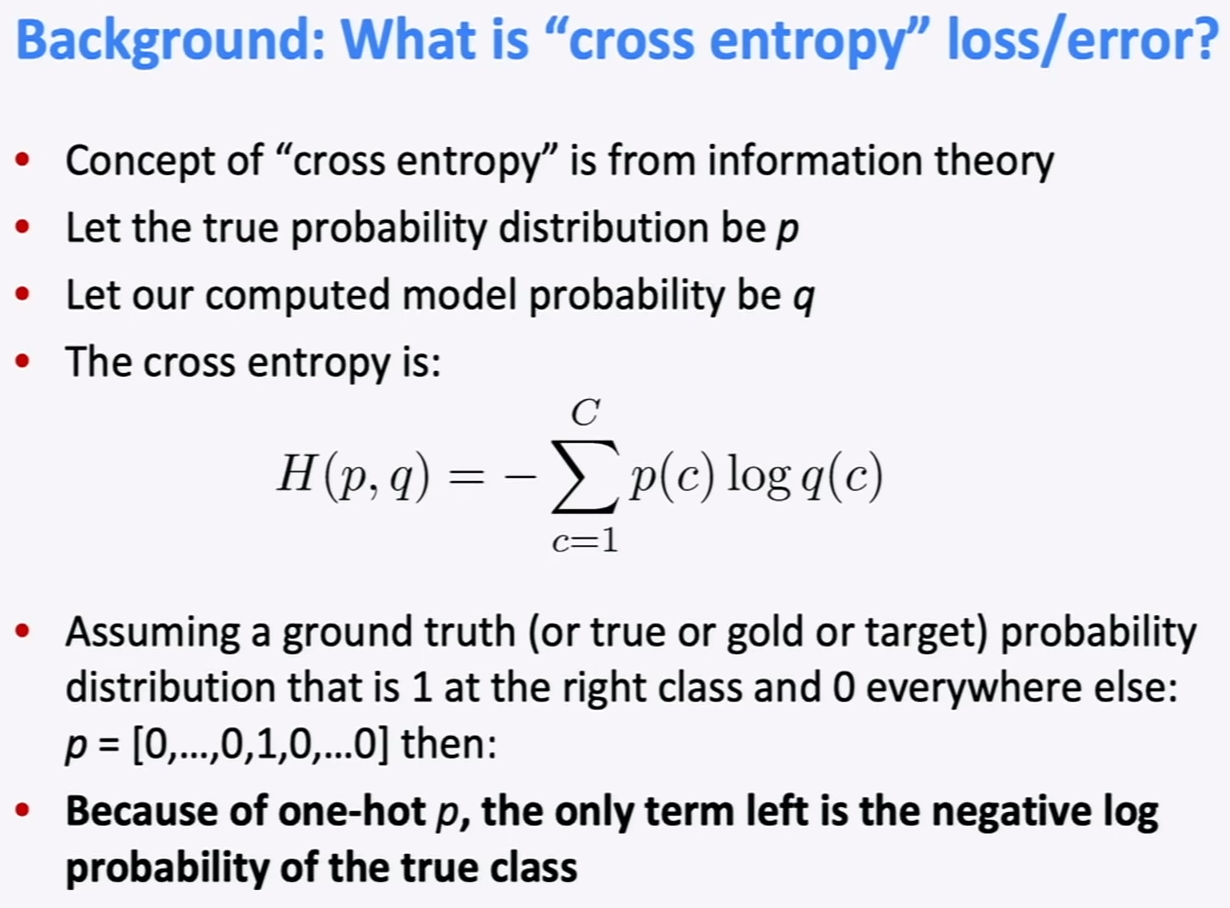

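For the loss, we assume the true distribution p is 1 at the correct class and 0 everywhere else (one-hot), so the cross-entropy for one example reduces to −log of the predicted probability of the correct class. Over the full dataset, we average this loss over all examples.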
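A minimal sketch of the full-dataset cross-entropy, assuming integer class labels `y` and a weight matrix `W` with one row per class (the function names are illustrative). Because p is one-hot, only the predicted probability of the true class is needed for each example:

```python
import numpy as np

def softmax_rows(scores):
    # Row-wise softmax; subtract each row's max for numerical stability.
    shifted = scores - scores.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)

def cross_entropy_loss(W, X, y):
    # X: (N, d) inputs, y: (N,) integer class labels, W: (C, d) weights.
    probs = softmax_rows(X @ W.T)
    # With a one-hot true distribution p, -sum_c p_c * log q_c reduces to
    # -log q at the true class, so we only pick out that entry per example.
    true_class_probs = probs[np.arange(len(y)), y]
    return -np.mean(np.log(true_class_probs))
```

For example, with all-zero weights every class gets probability 1/C, so `cross_entropy_loss(np.zeros((3, 2)), X, y)` equals log 3 for any inputs.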

Adjusting θ is the same as moving the decision boundary (the line in the right-hand picture); we want to move it so that the loss is minimized.
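A minimal sketch of what "moving the line" looks like in practice: plain gradient descent on the average cross-entropy of a softmax classifier. The toy data, learning rate, and number of steps are assumptions for illustration. The gradient uses the standard softmax/cross-entropy result ∂J/∂W = mean over examples of (q − p)xᵀ:

```python
import numpy as np

def softmax_rows(scores):
    shifted = scores - scores.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)

def loss_and_grad(W, X, y):
    # Average cross-entropy loss and its gradient with respect to W.
    n = len(y)
    probs = softmax_rows(X @ W.T)            # (N, C) predicted distributions q
    onehot = np.zeros_like(probs)
    onehot[np.arange(n), y] = 1.0            # (N, C) true distributions p
    loss = -np.mean(np.log(probs[np.arange(n), y]))
    grad = (probs - onehot).T @ X / n        # (C, d): mean of (q - p) x^T
    return loss, grad

# Hypothetical, linearly separable toy data: 2 classes, 2 features.
X = np.array([[1.0, 2.0], [2.0, 1.0], [-1.0, -2.0], [-2.0, -1.0]])
y = np.array([0, 0, 1, 1])

W = np.zeros((2, 2))
learning_rate = 0.5
losses = []
for step in range(50):
    loss, grad = loss_and_grad(W, X, y)
    losses.append(loss)
    W -= learning_rate * grad                # move theta against the gradient
```

The first loss is log 2 (all-zero weights give uniform predictions), and each step shifts the boundary so the loss goes down.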

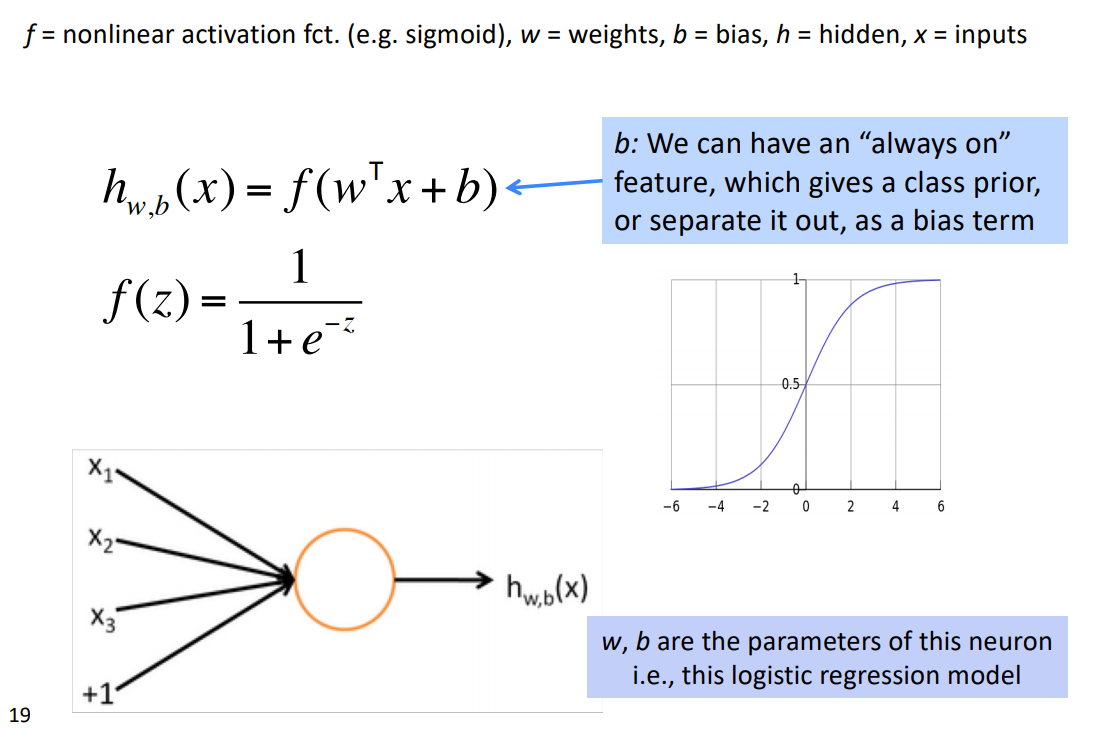

Softmax alone is not very powerful because it only gives linear decision boundaries, so we need some nonlinearity.
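To see why nonlinearity matters, here is the classic XOR example (a standard illustration, not from these notes). No single line separates the XOR labels, but one hidden layer with a ReLU does. The weights are hand-picked, not learned:

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

# Hand-picked weights for the classic XOR example (not learned).
W = np.array([[1.0, 1.0],
              [1.0, 1.0]])
b = np.array([0.0, -1.0])
u = np.array([1.0, -2.0])

def xor_net(x):
    h = relu(W @ x + b)   # hidden layer with a nonlinearity
    return u @ h          # linear read-out of the hidden features

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = [xor_net(np.array(p, dtype=float)) for p in inputs]
# outputs == [0.0, 1.0, 1.0, 0.0]
```

Dropping `relu` collapses the network to the linear function 2 − x₁ − x₂, which gives 2, 1, 1, 0 and cannot reproduce XOR.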

We learn the word representations (word vectors) and the parameters W at the same time.

A binary logistic regression unit is the same thing as one neuron.
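A minimal sketch of that one neuron in plain Python: a weighted sum of the inputs plus a bias, passed through the logistic (sigmoid) function. The names `neuron`, `w`, `x`, and `b` are illustrative:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(w, x, b):
    # One neuron = binary logistic regression: sigmoid(w . x + b).
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)
```

For example, `neuron([0.5, -0.5], [1.0, 1.0], 0.0)` has z = 0 and returns 0.5, i.e. the unit is undecided.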

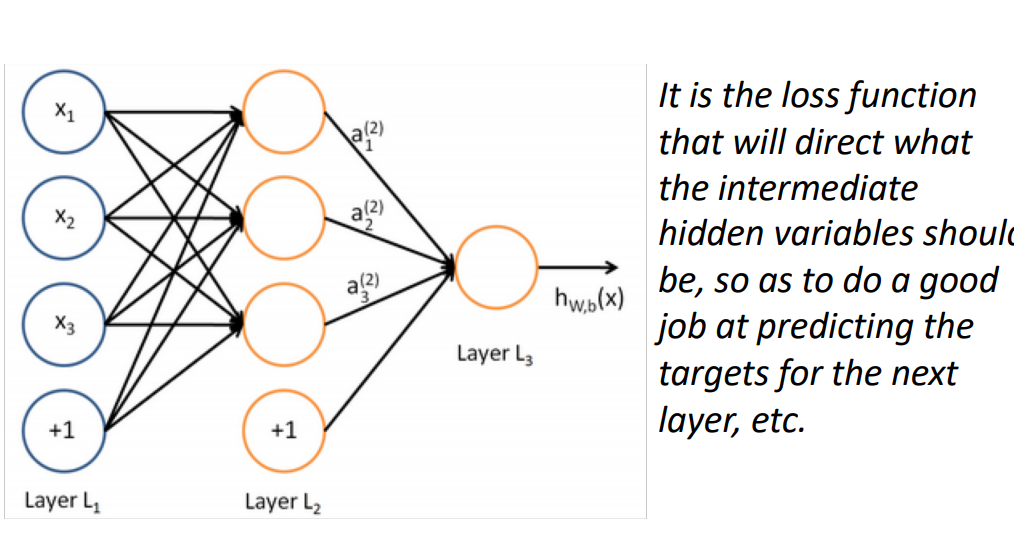

We don't want to decide ahead of time what each of those logistic regression units is trying to capture; we want the neural network to self-organize and learn something useful.

As in supervised learning, we have the desired result (the label) at the end.

Now we compare the prediction with the true result and minimize the (cross-entropy) loss during training. Since layer L2 is not actual data, its values keep changing during training in whatever way helps reduce the loss.
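A minimal sketch of that idea, assuming a toy XOR dataset, 4 sigmoid hidden units, and a sigmoid output unit trained with binary cross-entropy (all choices made here for illustration, not taken from the lecture). The hidden layer L2 is never given targets; its weights change only because the gradient of the final loss flows back into them:

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy XOR data (hypothetical); labels are 0/1.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([0.0, 1.0, 1.0, 0.0])

# Layer L2: 4 hidden logistic units; nobody tells them what to detect.
W = rng.normal(scale=1.0, size=(4, 2))
b = np.zeros(4)
# Output unit: one more logistic regression on top of the hidden units.
u = rng.normal(scale=1.0, size=4)
c = 0.0

lr = 0.5
losses = []
for step in range(2000):
    H = sigmoid(X @ W.T + b)           # (examples, hidden) activations
    p = sigmoid(H @ u + c)             # (examples,) predicted P(y = 1)
    loss = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    losses.append(loss)

    # Backpropagation of the binary cross-entropy loss.
    d_out = (p - y) / len(y)                       # dJ/d(output pre-activation)
    grad_u = H.T @ d_out
    grad_c = d_out.sum()
    d_hidden = np.outer(d_out, u) * H * (1 - H)    # dJ/d(hidden pre-activation)
    grad_W = d_hidden.T @ X
    grad_b = d_hidden.sum(axis=0)

    # The hidden layer and the output layer are updated together.
    W -= lr * grad_W
    b -= lr * grad_b
    u -= lr * grad_u
    c -= lr * grad_c
```

Tracking `losses` shows the loss going down as both layers are updated; whether the network fully solves XOR depends on the random initialization.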

Deep learning == stacking more layers (L3, L4, ...).

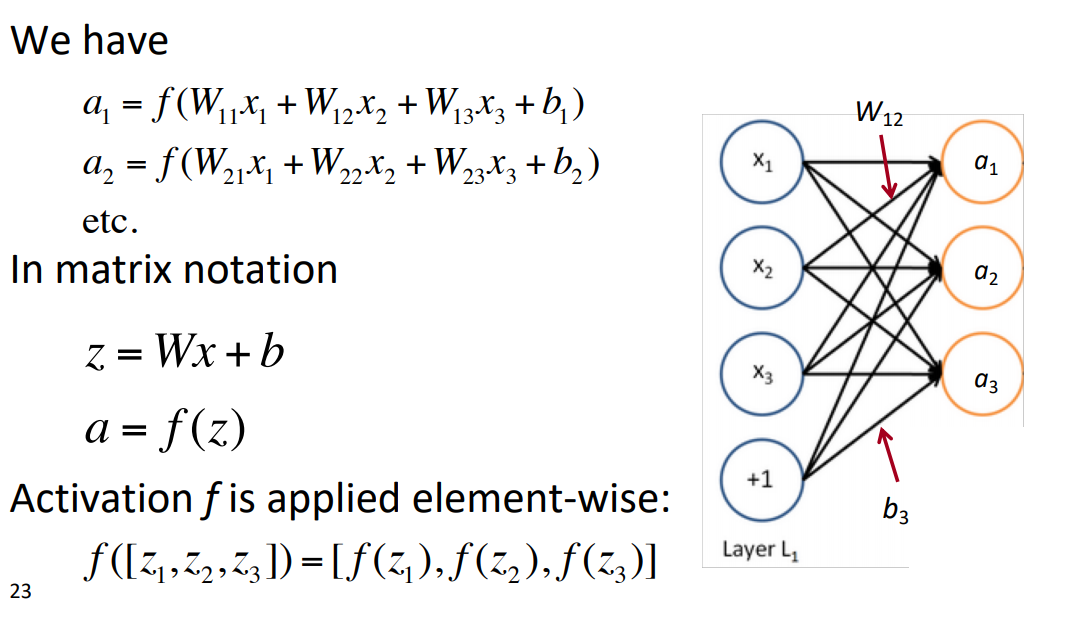

This is how to write it in matrix form in math notation; f is the nonlinearity (activation function), applied elementwise.
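The matrix form can be sketched as a small NumPy forward pass, assuming tanh as f and made-up layer widths. Stacking more `(W, b)` pairs is exactly the "more layers" idea:

```python
import numpy as np

def layer(W, b, x, f=np.tanh):
    # One layer in matrix form: z = Wx + b, then elementwise nonlinearity a = f(z).
    return f(W @ x + b)

def forward(params, x):
    # "Deep" = apply several such layers in sequence (L2, L3, L4, ...).
    a = x
    for W, b in params:
        a = layer(W, b, a)
    return a

rng = np.random.default_rng(0)
sizes = [3, 5, 4, 2]   # hypothetical layer widths: input 3 -> 5 -> 4 -> output 2
params = [(rng.normal(size=(n_out, n_in)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]
out = forward(params, np.ones(3))
```

Each W has shape (next layer width, previous layer width), so the shapes chain together automatically.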

Named Entity Recognition (NER)

Find and classify names in text, e.g. as "people", "location", etc. But one word can have different meanings in different contexts, so we use a window method to get information from the surrounding context.

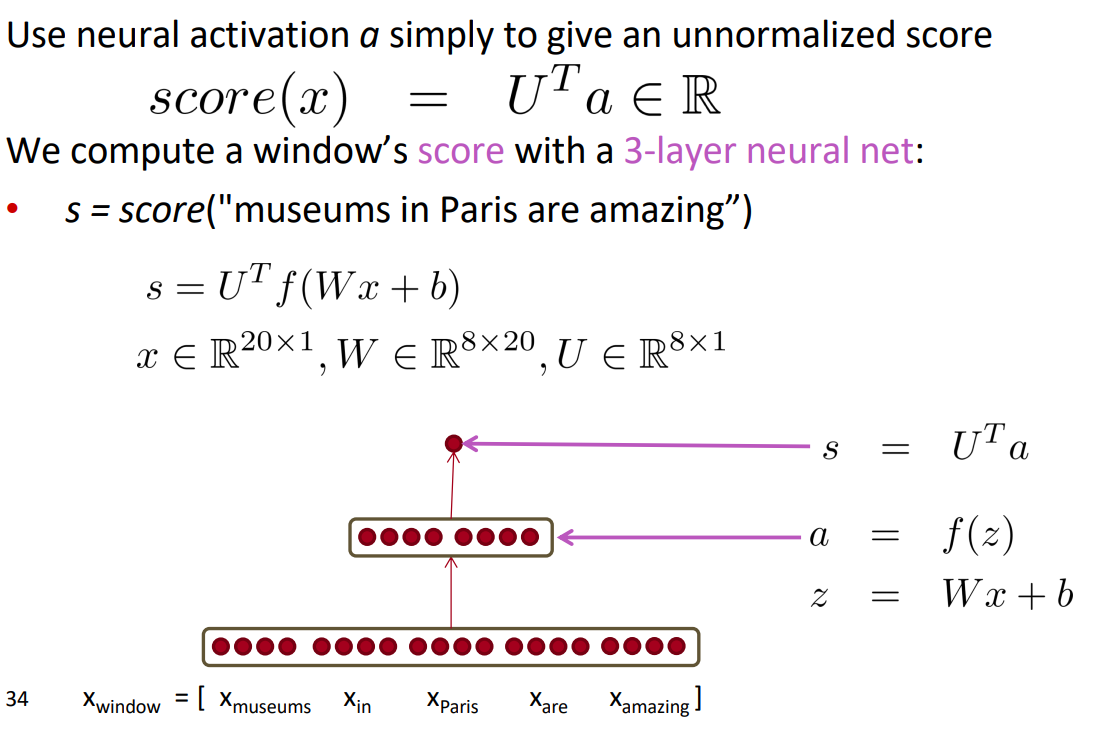

We are trying to predict whether "Paris" is a "location". We want to minimize the difference between the predicted score and the real entity label, and we use SGD (stochastic gradient descent) to train.
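The SGD update written out as a math block, where α is the learning rate and J is the training loss computed on one example (or a small batch) at a time:

```latex
\theta^{\text{new}} = \theta^{\text{old}} - \alpha \, \nabla_{\theta} J(\theta)
```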

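Each word is represented as a 4-dimensional vector, so the first layer x is 20 × 1: the window holds 5 words (the center word plus 2 on each side), and we concatenate all of their vectors.

We want to reduce this to 8 dimensions, so we use W (the hidden layer) of size 8 × 20.

Finally, we use U (8 × 1) to turn the 8-dimensional hidden vector into a scalar score: s = Uᵀ f(Wx + b).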
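A minimal sketch of the window classifier's forward pass with these shapes. The sentence, the random word vectors, and the choice of sigmoid for f are all assumptions for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sentence and random 4-dimensional word vectors.
sentence = ["museums", "in", "Paris", "are", "amazing"]
embeddings = {w: rng.normal(size=4) for w in sentence}

def window_vector(words, center, size=2):
    # Concatenate the vectors of the center word and `size` neighbours on each side.
    window = words[center - size: center + size + 1]
    return np.concatenate([embeddings[w] for w in window])

x = window_vector(sentence, center=2)    # shape (20,): 5 words x 4 dims
W = rng.normal(size=(8, 20))             # hidden layer: 20 -> 8
b = np.zeros(8)
U = rng.normal(size=8)                   # 8 -> scalar score

h = 1.0 / (1.0 + np.exp(-(W @ x + b)))   # f = sigmoid here (an assumption)
score = U @ h                            # one number: how "location-like" Paris is
```

The center word "Paris" occupies entries 8 to 11 of `x`, between the two left-context and two right-context vectors.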

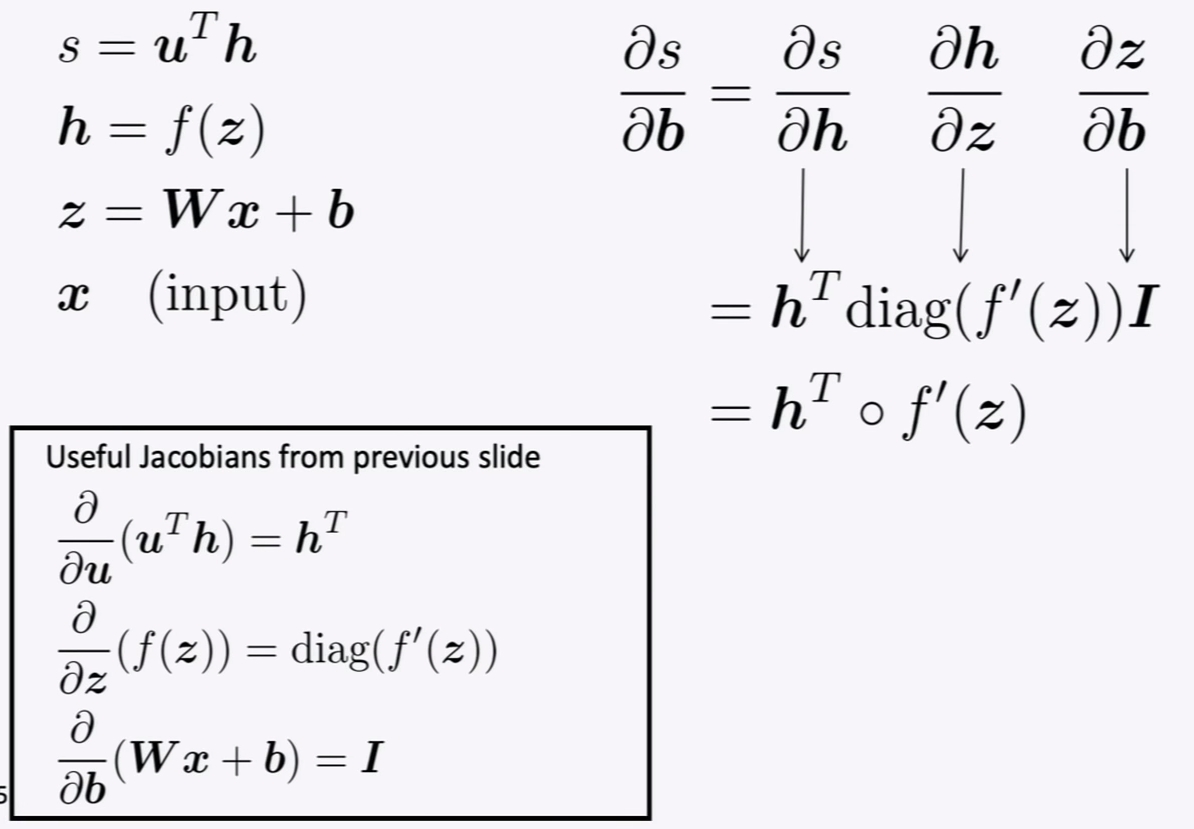

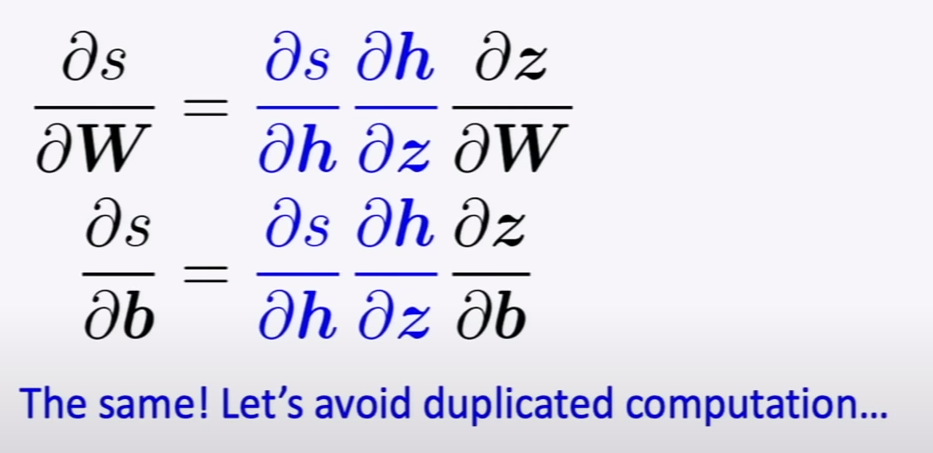

We are calculating how much the score changes for a small change in the bias b.
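A minimal sketch that computes ∂s/∂b with the chain rule and checks it against finite differences, assuming s = uᵀ f(Wx + b) with f = sigmoid and random values for every parameter:

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def score(W, b, u, x):
    # s = u^T f(Wx + b) with f = sigmoid (assumed nonlinearity).
    return u @ sigmoid(W @ x + b)

x = rng.normal(size=20)
W = rng.normal(size=(8, 20))
b = rng.normal(size=8)
u = rng.normal(size=8)

# Chain rule: ds/db = ds/dh * dh/dz * dz/db = u^T diag(f'(z)) I = u * f'(z).
z = W @ x + b
f_prime = sigmoid(z) * (1.0 - sigmoid(z))
grad_b = u * f_prime

# Check each component with a central finite difference.
eps = 1e-6
numeric = np.zeros_like(b)
for i in range(len(b)):
    step = np.zeros_like(b)
    step[i] = eps
    numeric[i] = (score(W, b + step, u, x) - score(W, b - step, u, x)) / (2 * eps)
```

Because dz/db is the identity, the gradient with respect to b is just u multiplied elementwise by f'(z).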

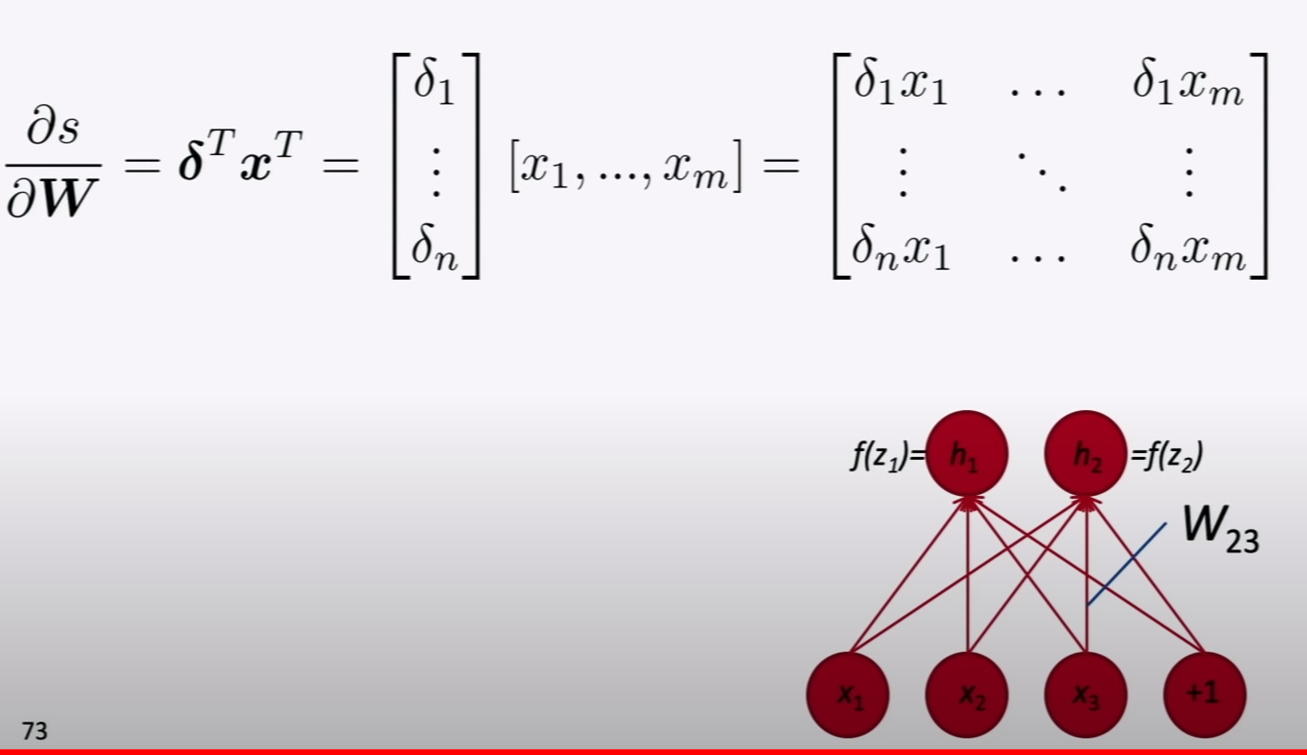

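δ (delta) is the error signal passed down from the layer above (the upstream gradient), which we can reuse when computing the other derivatives.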
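Written out with s = uᵀh, h = f(z), z = Wx + b, δ is the part of the chain rule shared by the gradients for b and W:

```latex
\delta = \frac{\partial s}{\partial z} = u^{T}\,\operatorname{diag}\big(f'(z)\big) = \big(u \circ f'(z)\big)^{T},
\qquad
\frac{\partial s}{\partial b} = \delta\,\frac{\partial z}{\partial b} = \delta\, I = \delta,
\qquad
\frac{\partial s}{\partial W} = \delta\,\frac{\partial z}{\partial W}
```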

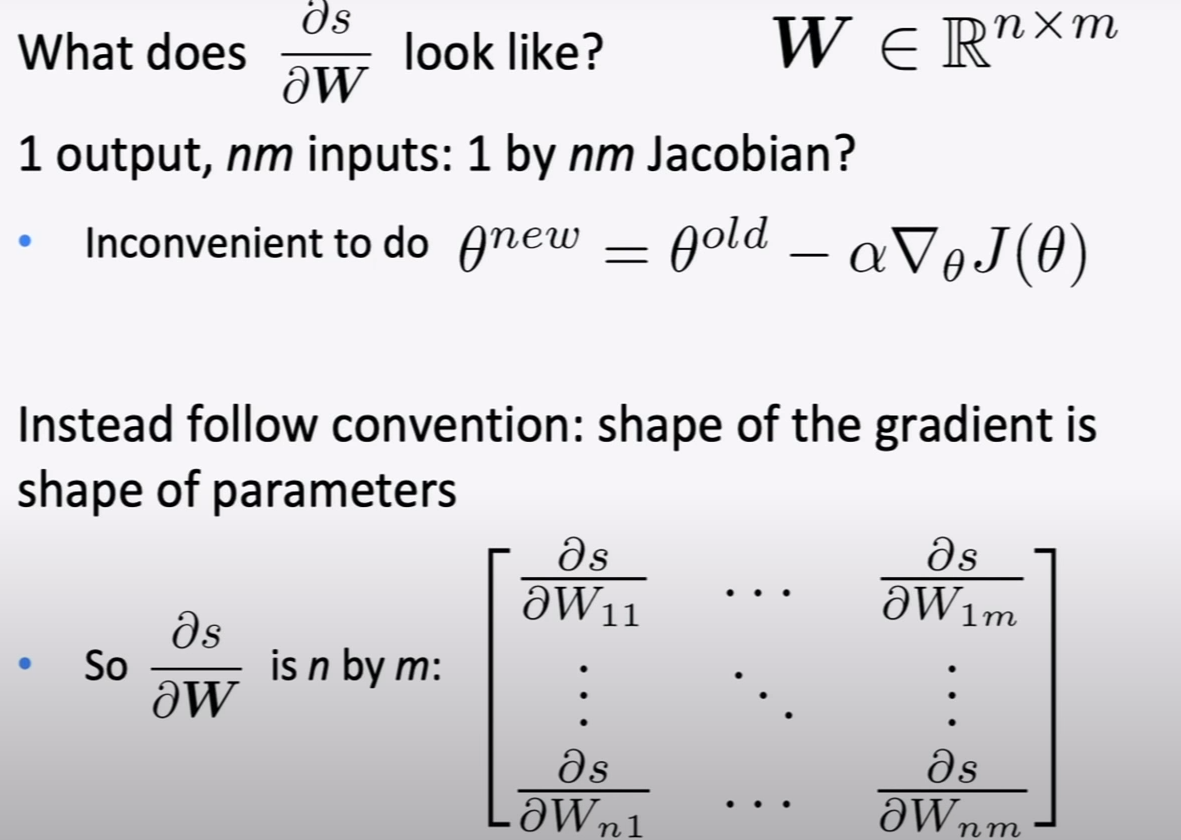

We arrange our Jacobian in matrix form (with the same shape as W) so that we can compute it easily.

Because z = Wx + b, taking the derivative with respect to W leaves just x.

Each combination (each connecting line in the picture) corresponds to one element of W.
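A minimal sketch of ∂s/∂W = δᵀxᵀ as an outer product, assuming the same s = uᵀ f(Wx + b) with f = sigmoid and random parameters. Entry (i, j) is δᵢxⱼ, one value per element of W, and one entry is spot-checked with a finite difference:

```python
import numpy as np

rng = np.random.default_rng(1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def score(W, b, u, x):
    # s = u^T f(Wx + b), with f = sigmoid as an assumed nonlinearity.
    return u @ sigmoid(W @ x + b)

x = rng.normal(size=20)
W = rng.normal(size=(8, 20))
b = rng.normal(size=8)
u = rng.normal(size=8)

z = W @ x + b
delta = u * sigmoid(z) * (1.0 - sigmoid(z))   # upstream gradient ds/dz, shape (8,)
grad_W = np.outer(delta, x)                   # ds/dW = delta^T x^T, shape (8, 20)

# Entry (i, j) should equal delta_i * x_j; spot-check one with a finite difference.
i, j, eps = 3, 7, 1e-6
bump = np.zeros_like(W)
bump[i, j] = eps
numeric_ij = (score(W + bump, b, u, x) - score(W - bump, b, u, x)) / (2 * eps)
```

The result has the same shape as W (8 × 20), which is what makes the gradient-descent update W − α ∂s/∂W work elementwise.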
