we want to some how see image like left above , and find the shortest path and output that path with image like right above or how long is shortest path

h,v = parameters(theta) things we cal when backprop

problem is how we are gonna backpropagte since "fu" is for determing some property of shortest path ,for example getting distance of shortest path, but "hv" is about extracting input graph to the dijkstra algorithm from the image

and organe circle is discrete process since path are not going to change until some big disturbence(threshold) occur

this paper shows how we can do backprop even with discrete process inside our model

paper's claim

1. propose maximum likelihood estimation as a framework for computing gradient with respect to the parameters of discrete exponential family distribution(middle orange circle)

2. show that this framework is useful for backpropagating gradients through both discrete probability distributions and discrete combinatorial optimization problem (shortest path)

3. ..?

4,5 = our thing is good

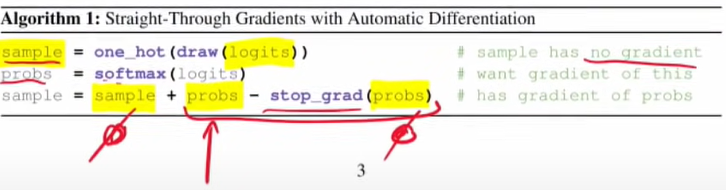

striaght through estimator (STE)

at first NN, we sample some distribution and we sample one of that class and put it into second NN

but backpropagating thorugh the sampling procedure is hard (2nd NN to distribution)

h= histogram , s=most likely state (argmax of h)

at forward path , stop gradient has no effect "h - sg(h)" == 0 and at this back propagation only delta"h" is left

acting like we never sampled , and got ouput from distribution "h"

forward propgation = sample , it has no gradient

stop_grad(probs) = no gradient

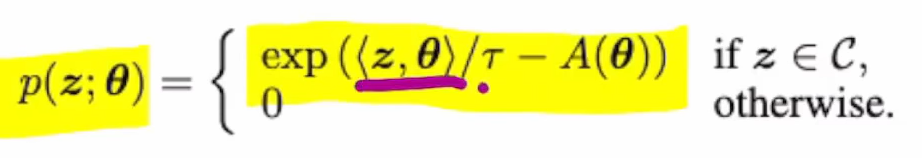

z = one,zero vector for (in this example a path) , C= constraint set

theta = a value for certain path (edge) which is vector and has minus value

<z,theta> = inner product , and we are trying to find that maxmize this

t = temperature , A(theta) = normailzation

p is solution space for our dijkstra algorithm , a distribution that would assign a high value (likelihood) to paths that are very short in the graph that is defined by theta , low value to that are very long in the same theta(graph)

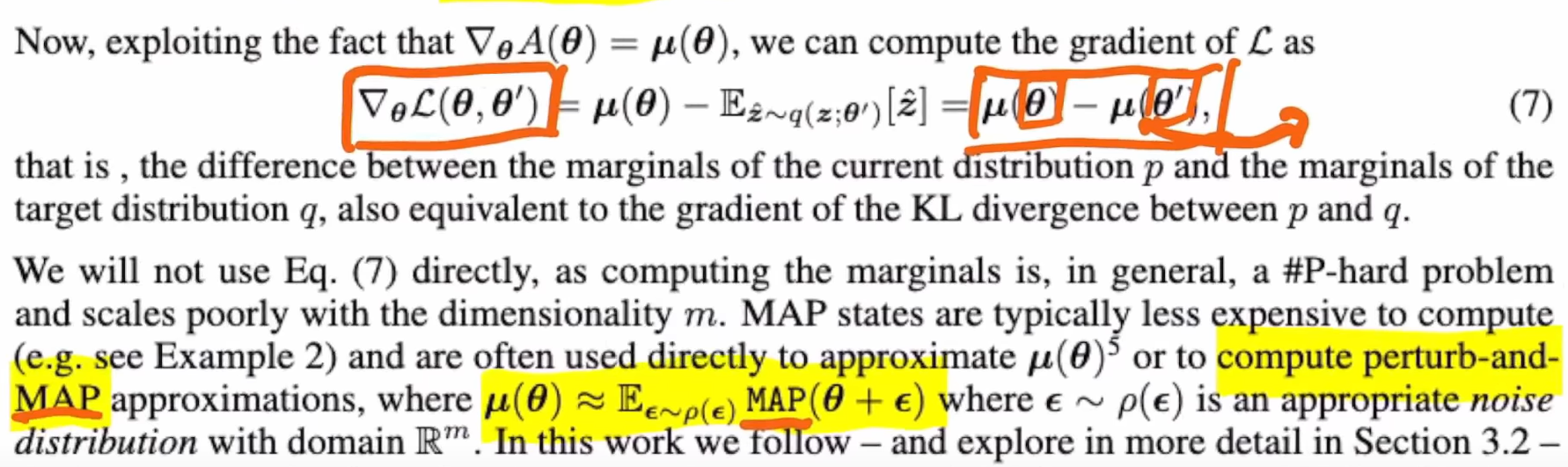

we need gradient for theta so we can cal gradient for "v".

theta is sort of like adjacent graph(output of first NN) and when we are trying to backprop things from "z" we are trying to find shorter path by changing adjacent graph (close to dijkstra shortest path)

we would like to find a "q" target distribution that always has lower Loss function value

given crappy graph(theta) we can still do GD and get little more better graph => which is same as heading to get optimal target distriubtion q (which is valid graph space for dijkstra)

new objective

1. what would be better input for discrete solver (we want q)

2. can we make our input that we received more like the input to discrete solver (how can we make p to q)

this Loss is for q - p and we would like those 2 distribution to get close by adjusting p with GD

and this Loss cannot be differentiate

we cannot cal mean of distribution theta so we do MAP(most likely state) of theta = trick

we cal mean by pertubing edge's value (like monte carlo) and approximate paths that is often (mean) in this graph space distribution

summary

in backward = cal problem,graph (theta) that would make down stream NN life easier , want target distribution(q) , get surrogate Loss => make forward to produce better problem, graph(theta)

'AI > Yannic Kilcher' 카테고리의 다른 글

| BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (0) | 2021.11.21 |

|---|---|

| Attention is All You Need (0) | 2021.11.21 |