History

EC2 = rent bunch of virutal machines in a single physical node + locally attached storage (hard drive for virtual machine guest)

for webserver ,since it is state less , it is okay if ec2 instances go down client can just fire up new webserver

but for db if the ec2 goes down there is no way to access that attached storage -> AWS provides S3 to store snapshot of DB but between snapshot are still vulnerable

EBS (Elastic Block Store)

pair of EBS server exist -> DB send write to EBS and chain replication happen -> last EBS sends response to DB

now if DB EC2 goes down we can just fire up new EC2 + DB and attache to EBS

only one EC2 can mount to one EBS (not sharable)

EBS pair is stored in same database center for performance so if both of them fail no way to recover

DB writes use network to send data to EBS -> network overload happens

DB tutorial - transaction , crash recovery

before actually commiting to disk -> log must be wrriten -> DB cache update to 510,740 -> disks get updated (wrriten log can be reused, don't have to write again)

each log entiry have it's transaction id -> recovery software can know what transaction need to be undone

RDS (Relational Database Service)

seperate available zone (different database center) -> more fault tolerance

but still using network -> expensive and slow

Aurora

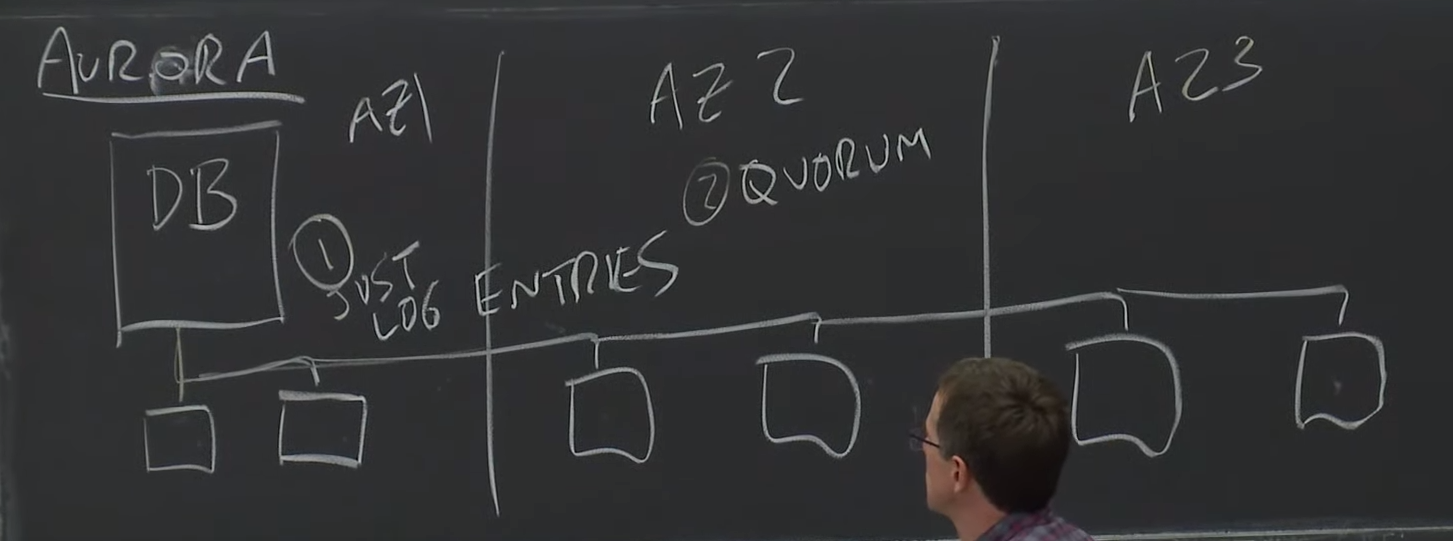

3 available zone , 6 replication -> super tolerence

1. only sends log entries not whole write data -> faster than RDS

2. use Quorum

3. read only replica DB

fault tolerence goals for Aurora

1. want to write even with 1 dead AZ

2. want to read even with 1 dead AZ + 1 server dead

3. transient slow???

4. fast replication generation if 1 server fails

Qourum replciation

total N replicas

if we want to write -> W(number)<N need to be recognized by other replicas

same for Read(R<N, number)

R+W = N+1 -> read and write server needs to have at least 1 overlap server

version exist when client attempt to read and see 2 Servers( S2, S3) have different version client will read highest(latest) versioned data

do not need to wait for slow or dead servers client just to wait for threshold of servers

we can make read faster or write faster by choosing number(R,W)

storage servers (6 of them)

storage server will have old data pages and log entries will get attach to that old pages

when db evicts data pages from cache and needs to read again

db sends read -> SS need to update the old pages -> storage server will apply the logs entries

what if we need storage server that needs very big data storage -> split to 6 SS

each of PG(protection group) store some fraction of data pages + all the log records that applied to those data pages (subset of log which are relevent to that pages)

each node has different auora instance's data fragment

if one node fail we can recover from getting data from all the other PG node

reads happens more than write

Aurora has read replica db , but only 1 write db

read replica can cache data -> write db sends log to read replica -> to update cache data pages

'Database > Distributed Systems' 카테고리의 다른 글

| Lecture 12: Distributed Transactions (0) | 2022.04.04 |

|---|---|

| Lecture 11: Cache Consistency: Frangipani (0) | 2022.03.31 |

| Lecture 8: Zookeeper , More Replication, CRAQ (0) | 2022.03.05 |

| Lecture 6: Fault Tolerance: Raft (1) ,(2) (0) | 2022.02.26 |

| Lecture 4: Primary-Backup Replication (0) | 2022.02.11 |