Lecture 4: Theoretical Fund. of Dynamic Programming Algorithms

Contraction mapping

we take a sequence that is convergent in that space , apply Transfomation (T) to that sequence, we get another convergence sequence that covnerges to T of x.

which is limit of that sequence

fixed point

if apply T trasnform to x it goes back to original x

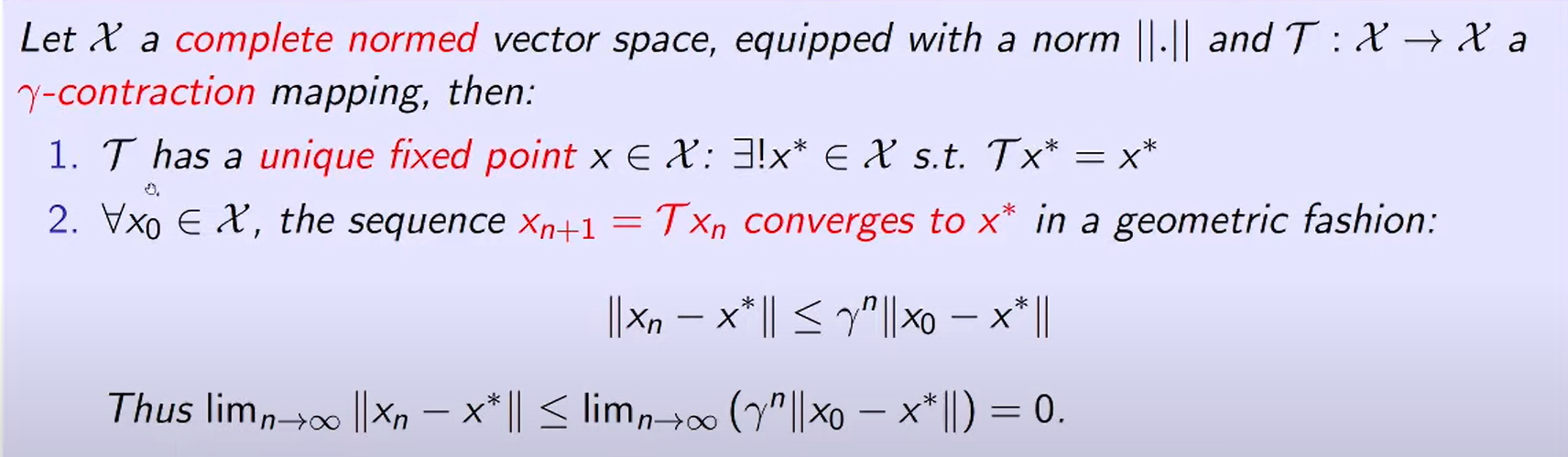

Banach Fixed Point Theorem

we can know that sequence is convergent to unique fixed point X star

The Bellman Optimality Operator

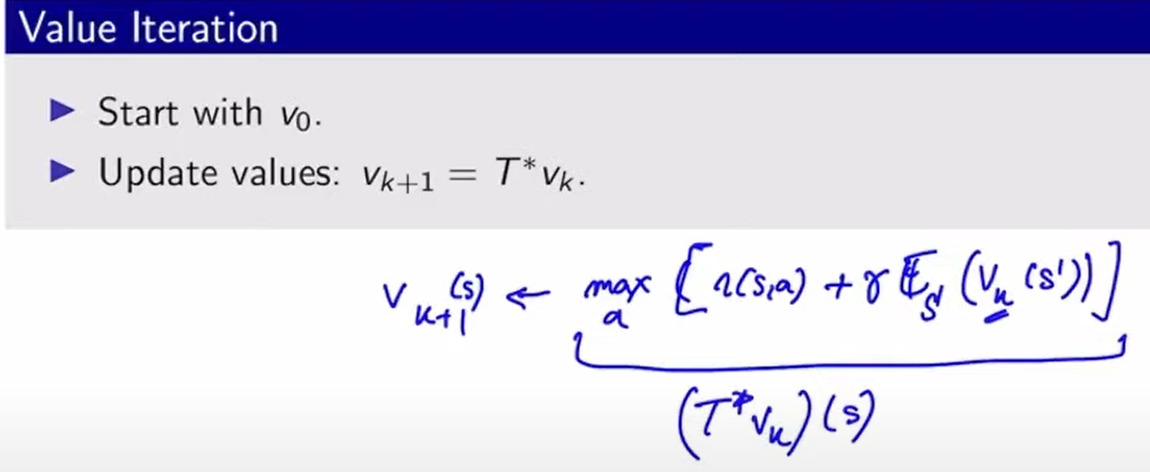

Value Iteration through th elens of the bellman operator

value iteration always converge as long as gamma is less than 1

Approximate Dynamic Programming

we have assumed prefect knowledge of the MDP and perfect / exact representation of the value funcitons

realistically , more often than not

we don't know the underlying MDP

=> sampling / estimation error , as we don't have access to the true operators "T"

we won't be able to represent the value function exactly after each update

=> approximation error , as we approximate the true value functions within a class

Objective = under the above conditions , come up with a policy pie that is close to optimal

A = Approximation ( sample or function approximation)

the case where function approximator might diverge

if gamma is high , even though we chose pretty good "q" it still might be far from optimal case, because our upper bounds are high

if gamm is low ,vice versa